A Neural Approach to Blind Motion Deblurring

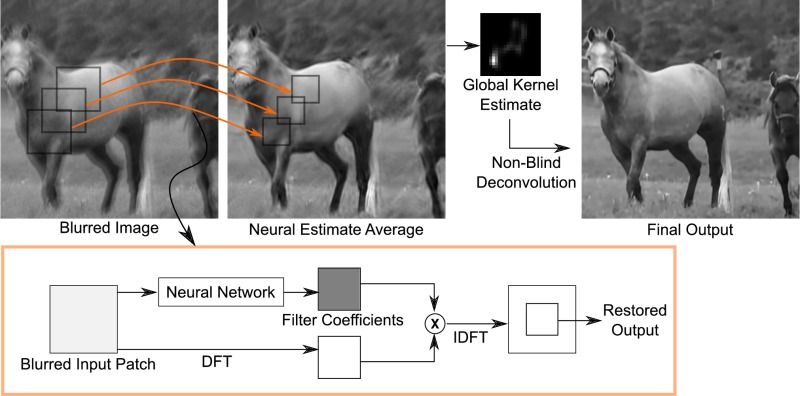

We present a new method for blind motion deblurring that uses a neural network trained to compute estimates of sharp image patches from observations that are blurred by an unknown motion kernel. Instead of regressing directly to patch intensities, this network learns to predict the complex Fourier coefficients of a deconvolution filter to be applied to the input patch for restoration. For inference, we apply the network independently to all overlapping patches in the observed image, and average its outputs to form an initial estimate of the sharp image. We then explicitly estimate a single global blur kernel by relating this estimate to the observed image, and finally perform non-blind deconvolution with this kernel. Our method exhibits accuracy and robustness close to state-of-the-art iterative methods, while being much faster when parallelized on GPU hardware.
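The three inference steps described above can be illustrated with a small NumPy sketch. It is not the released implementation, and several pieces are assumptions. The trained network is replaced by a hypothetical `identity_filter` stand-in that returns all-ones Fourier coefficients. The patch size, stride, regularisation weights and circular-convolution blur model are illustrative choices. The global kernel is fit by a plain regularised least-squares solve in the Fourier domain. The final non-blind step uses a simple Wiener-style filter rather than the deconvolution method used in the paper.

```python
import numpy as np


def kernel_to_otf(k, shape):
    """Zero-pad a small kernel to `shape`, centre it on the origin and return
    its 2-D DFT (assumption: blur is modelled as circular convolution)."""
    kh, kw = k.shape
    pad = np.zeros(shape)
    pad[:kh, :kw] = k
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(pad)


def blur(x, k):
    """Circularly convolve image x with kernel k."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * kernel_to_otf(k, x.shape)))


def identity_filter(patch):
    """HYPOTHETICAL stand-in for the trained network: it should return the
    complex DFT coefficients of a deconvolution filter for this patch.
    Here it returns all ones, i.e. 'leave the patch unchanged'."""
    return np.ones(patch.shape, dtype=complex)


def restore_by_patches(y, predictor=identity_filter, patch=16, stride=4):
    """Step 1: filter every overlapping patch in the Fourier domain with the
    predicted coefficients and average the overlapping outputs."""
    acc = np.zeros(y.shape)
    cnt = np.zeros(y.shape)
    H, W = y.shape
    for i in range(0, H - patch + 1, stride):
        for j in range(0, W - patch + 1, stride):
            p = y[i:i + patch, j:j + patch]
            coeffs = predictor(p)  # complex array, same shape as p
            acc[i:i + patch, j:j + patch] += np.real(
                np.fft.ifft2(np.fft.fft2(p) * coeffs))
            cnt[i:i + patch, j:j + patch] += 1
    # pixels not covered by any patch fall back to the observation
    return np.where(cnt > 0, acc / np.maximum(cnt, 1), y)


def estimate_kernel(x, y, ksize, eps=1e-3):
    """Step 2: regularised least-squares fit of one ksize x ksize kernel k
    with blur(x, k) ~= y, solved independently at each frequency."""
    X, Y = np.fft.fft2(x), np.fft.fft2(y)
    k_full = np.real(np.fft.ifft2(np.conj(X) * Y / (np.abs(X) ** 2 + eps)))
    # undo the centring used by kernel_to_otf, then crop the kernel support
    k_full = np.roll(k_full, (ksize // 2, ksize // 2), axis=(0, 1))
    k = np.clip(k_full[:ksize, :ksize], 0, None)  # blur kernels are non-negative
    return k / max(k.sum(), 1e-12)                # and sum to one


def wiener_deconv(y, k, reg=1e-2):
    """Step 3: non-blind deconvolution with a Wiener/Tikhonov-style filter."""
    otf = kernel_to_otf(k, y.shape)
    X = np.conj(otf) * np.fft.fft2(y) / (np.abs(otf) ** 2 + reg)
    return np.real(np.fft.ifft2(X))


def deblur(y, ksize=9, predictor=identity_filter):
    x0 = restore_by_patches(y, predictor)  # initial sharp estimate
    k = estimate_kernel(x0, y, ksize)      # single global kernel
    return wiener_deconv(y, k), k          # final restored image


# Usage: synthesise a horizontally motion-blurred image
rng = np.random.default_rng(0)
x = rng.random((64, 64))
k_true = np.zeros((9, 9))
k_true[4, :] = 1 / 9
y = blur(x, k_true)
k_est = estimate_kernel(x, y, 9)  # given a perfect sharp estimate
x_hat = wiener_deconv(y, k_true)
```

The only thing this sketch expects of a network is a function mapping a patch to a complex array of the same shape. With the identity stand-in the initial estimate equals the observation, so `deblur` recovers only a delta kernel. A real predictor is what makes the kernel estimate informative.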

| Resource | Link |
|---|---|
| Publication | ECCV 2016 [arXiv] |
| Downloads: Source Code | [GitHub] |
| Additional Visual Comparisons | [PDF: 5.2 MB] |
| Sun'13 Benchmark Output | [ZIP: 138 MB] |
| Trained Neural Model | [MAT: 404 MB] (Plot of training & val losses) |
